SQLizer is great at converting text based files into SQL scripts. It can handle files that use almost any delimiter and can handle some very large files.

CSV files usually use commas to spearate the values in each column, like this:

ID,Values

1,foo

2,bar

3,bazBy default SQLizer will assume that a file with a .csv extension is using commas to separate its values.

However, another common delimiter is the semicolon, like this:

ID;Values

1;foo

2;bar

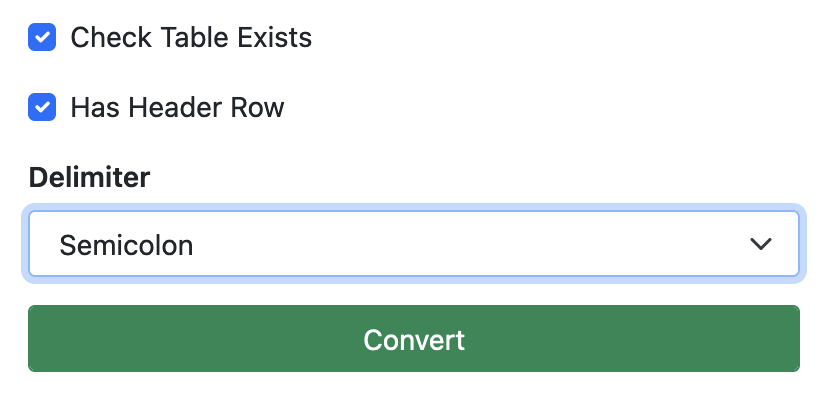

3;bazYou can tell SQLizer to use semicolons as the delimiter using the "Delimiter" dropdown in the user interface:

Or by setting the Delimiter property when using the API.

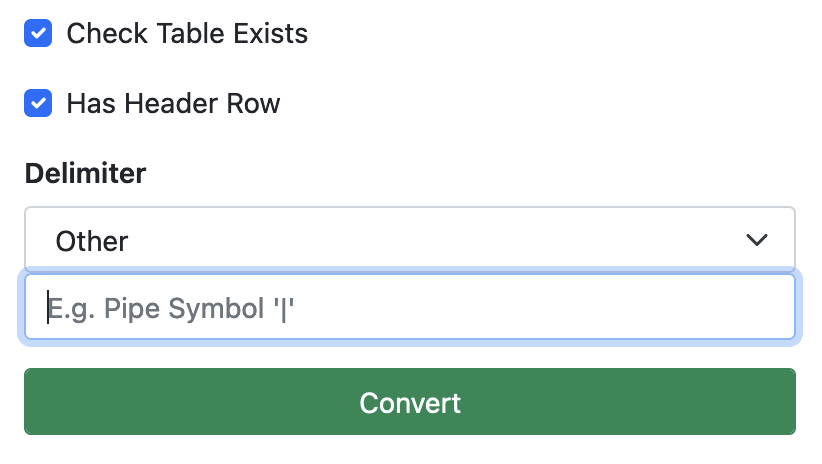

If your file has some kind of delimiter that is not one of the options in the SQLizer user interface, e.g. the pipe character "|", you can select "Other" and enter the type of delimiter used:

Using this feature, you can convert almost any line based text file using SQLizer such as tab separated files, or any text files that have a regular delimiter in them, regardless of its file extension.

CSV files sometimes use quotes to enable the text values to contain commas (or any other delimiter characters) without them being interpreted as separate values. SQLizer will automatically detect this and handle it appropirately:

"ID","Values"

"1","foo"

"2","bar,baz"

"3","buzz"This will be converted into:

CREATE TABLE IF NOT EXISTS csv_quotes (

`ID` INT,

`Values` VARCHAR(7) CHARACTER SET utf8

);

INSERT INTO csv_quotes VALUES

(1,'foo'),

(2,'bar,baz'),

(3,'buzz');IF NOT EXISTS (

select * from sysobjects where name='csv_quotes' and xtype='U'

) CREATE TABLE csv_quotes (

[ID] INT,

[Values] NVARCHAR(7)

);

INSERT INTO csv_quotes VALUES

(1,N'foo'),

(2,N'bar,baz'),

(3,N'buzz');CREATE TABLE IF NOT EXISTS "csv_quotes" (

"ID" INT,

"Values" TEXT

);

INSERT INTO "csv_quotes" VALUES

(1,'foo'),

(2,'bar,baz'),

(3,'buzz');CREATE TABLE IF NOT EXISTS csv_quotes (

ID INTEGER,

Values TEXT

);

INSERT INTO csv_quotes VALUES

(1,'foo'),

(2,'bar,baz'),

(3,'buzz');When quotes are used, double quotes will be interpreted as a quote within the value:

"ID","Values"

"1","foo"

"2","""bar"""

"3","baz"This will be converted into:

CREATE TABLE IF NOT EXISTS csv_quotes2 (

`ID` INT,

`Values` VARCHAR(5) CHARACTER SET utf8

);

INSERT INTO csv_quotes2 VALUES

(1,'foo'),

(2,'"bar"'),

(3,'baz');IF NOT EXISTS (

select * from sysobjects where name='csv_quotes2' and xtype='U'

) CREATE TABLE csv_quotes2 (

[ID] INT,

[Values] NVARCHAR(5)

);

INSERT INTO csv_quotes2 VALUES

(1,N'foo'),

(2,N'"bar"'),

(3,N'baz');CREATE TABLE IF NOT EXISTS "csv_quotes2" (

"ID" INT,

"Values" TEXT

);

INSERT INTO "csv_quotes2" VALUES

(1,'foo'),

(2,'"bar"'),

(3,'baz');CREATE TABLE IF NOT EXISTS csv_quotes2 (

ID INTEGER,

Values TEXT

);

INSERT INTO csv_quotes2 VALUES

(1,'foo'),

(2,'"bar"'),

(3,'baz');If you have large amounts of data to convert, a CSV file format is the ideal choice from SQLizer's percpective. This is because CSV files are line based (i.e. each line is a separate set of data that does not depend on what came before it in the file) so SQLizer is able to process them very efficiently, only loading a small part of the file into memory at any one time. This is not the case for JSON or XML files where the correct way to interpret each line of the file depends on where it is within the file, or for spreadsheets, which are really just XML files that have been compressed.

There is currently no hard limit on the size of a CSV file that SQLizer can convert. Our upload process is designed to handle some very large files and we sometimes see users processing CSV files that are 100s of GBs in size. In practice your database server's ability to handle the resulting SQL script is probably the limiting factor, although we expect SQLizer would probably fail once you get over a terabyte of CSV data.

Spreadsheets have a size limit of 100MB as spreadsheet files are compressed and will take up considerably more space once decompressed for processing. The limit for JSON / XML files is 250MB. If you attempt to convert a file of those types that is too big, SQLizer will return an error as soon as the upload is complete.